A short while ago, I asked a bunch of people for long-term touchpad usage data (specifically: evemu recordings). I currently have 25 sets of data, the shortest of which has 9422 events, the longest of which has 987746 events. I asked that evemu-record be run in the background while people used their touchpad normally. The data is therefore quite messy: it contains taps, two-finger scrolling, edge scrolling, palm touches, etc. It's also raw data from the touchpad, not processed by libinput.

Some care has to be taken with analysis, especially since the data is weighted towards long recordings. In other words, the user with 987k events has more influence than the user with 9k events. So the data is useful for looking for patterns that can be independently verified with other data later. But it's also useful for disproving hypotheses, e.g. "we cannot do $foo because some users' events show $bla".

One of the things I've looked into was tapping.

In libinput, a tap is defined by two thresholds: a time threshold and a movement threshold. If the finger is held down longer than 180ms or moves more than 3mm, it is not a tap. These numbers are either taken from synaptics or just guesswork (both, probably).
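The rule above fits in a few lines. This is a simplified illustration, not libinput's code: libinput implements tapping as a state machine in C, and the constant and function names below are hypothetical. The sketch also only looks at the total duration and the distance between the finger-down and finger-up positions, which is what the analysis in this post measures.

```python
# Hypothetical names; libinput's real tap handling is a C state machine.
TAP_TIMEOUT_MS = 180.0  # held down longer than this: not a tap
TAP_MOVE_MM = 3.0       # moved further than this: not a tap


def is_tap(duration_ms, movement_mm,
           timeout_ms=TAP_TIMEOUT_MS, move_mm=TAP_MOVE_MM):
    """Return True if a single-finger touch counts as a tap.

    duration_ms: time between finger down and finger up
    movement_mm: distance between the finger-down and finger-up positions
    """
    return duration_ms <= timeout_ms and movement_mm <= move_mm


print(is_tap(60, 0.2))   # True: short and nearly still
print(is_tap(250, 0.2))  # False: held too long
print(is_tap(60, 4.5))   # False: moved too far
```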

The need for a time-based threshold is obvious: we don't know whether the user is tapping until we see the finger up event. Only if that doesn't happen within a given time do we know that the user simply put the finger down. The movement threshold is required because small movements occur while tapping, caused either by the finger really moving (e.g. when tapping shortly before/after a pointer motion) or by the finger center moving (as the finger flattens under pressure, the center may move a bit). Either way, these thresholds delay real pointer movement, making the pointer less reactive than it could be. So it's in our interest to have these thresholds low to get reactive pointer movement, but as high as necessary to have reliable tap detection.
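One way to picture why the thresholds cost responsiveness: a touch that may still turn out to be a tap can't be forwarded as pointer motion yet. The sketch below is a hypothetical, heavily simplified tracker (the `TouchTracker` class and its methods are invented for illustration and do not mirror libinput's implementation). It withholds motion until the touch exceeds the time or movement threshold and then releases the accumulated movement as one delta. It only checks the timeout when an event arrives; a real implementation would also use a timer.

```python
import math
from dataclasses import dataclass


@dataclass
class TouchTracker:
    """Hypothetical, simplified tap tracker for a single touch.

    While a touch could still be a tap, its motion is withheld; once the
    touch exceeds either threshold, the accumulated movement is released.
    The timeout is only checked when an event arrives (no timer).
    """
    timeout_ms: float = 180.0
    move_mm: float = 3.0
    start_time: float = 0.0
    start_pos: tuple = (0.0, 0.0)
    last_pos: tuple = (0.0, 0.0)
    tap_candidate: bool = False

    def down(self, t_ms, x_mm, y_mm):
        self.start_time = t_ms
        self.start_pos = self.last_pos = (x_mm, y_mm)
        self.tap_candidate = True

    def motion(self, t_ms, x_mm, y_mm):
        """Return the pointer delta (dx, dy) in mm, or None if withheld."""
        if self.tap_candidate:
            moved = math.dist(self.start_pos, (x_mm, y_mm))
            elapsed = t_ms - self.start_time
            if moved <= self.move_mm and elapsed <= self.timeout_ms:
                return None  # may still be a tap: no pointer motion yet
            self.tap_candidate = False  # a threshold was exceeded: it's motion
        delta = (x_mm - self.last_pos[0], y_mm - self.last_pos[1])
        self.last_pos = (x_mm, y_mm)
        return delta

    def up(self, t_ms):
        """Return True if the touch ended as a tap."""
        tap = self.tap_candidate and t_ms - self.start_time <= self.timeout_ms
        self.tap_candidate = False
        return tap


tracker = TouchTracker()
tracker.down(0, 10.0, 10.0)
print(tracker.motion(20, 10.5, 10.0))  # None: 0.5mm is within the threshold
print(tracker.motion(40, 14.0, 10.0))  # (4.0, 0.0): withheld movement released at once
print(tracker.up(60))                  # False: the touch became pointer motion
```

The lower the two thresholds, the earlier `motion()` stops returning `None`, which is exactly the responsiveness the post is trying to gain.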

General data analysis

Let's look at the (messy) data. I wrote a script to calculate the time delta and movement distance for every single-touch sequence, i.e. anything with two or more fingers down was ignored. The script used a range of 250ms and 6mm of movement, discarding any sequences outside those thresholds. I also ignored anything in the left-most or right-most 10% of the touchpad, because anything there that looks like a tap is likely a palm interaction [1]. I ran the script against those files where the users reported that they use tapping (10 users), which gave me 6800 tap sequences. Note that the ranges are purposely larger than libinput's, to detect whether a significant number of attempted taps exceed the current thresholds and would thus be misdetected as non-taps.
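The filtering step can be sketched like this. Parsing the evemu recordings into per-touch sequences is not shown; the `TouchSequence` structure, its fields and the `candidate_taps` function are hypothetical, and positions are assumed to already be in mm with x = 0 at the left edge of the touchpad. The start x position is normalised to the touchpad width so the 10% edge filter applies to any touchpad size.

```python
import math
from dataclasses import dataclass


@dataclass
class TouchSequence:
    """Hypothetical summary of one touch, from finger down to finger up.

    Positions are in mm, with x = 0 at the left edge of the touchpad.
    """
    t_down_ms: float
    t_up_ms: float
    down_mm: tuple    # (x, y) at finger down
    up_mm: tuple      # (x, y) at finger up
    max_fingers: int  # largest number of fingers down during this touch


def candidate_taps(sequences, pad_width_mm, max_ms=250.0, max_mm=6.0, edge=0.10):
    """Return (duration_ms, movement_mm) for each sequence that may be a tap."""
    result = []
    for s in sequences:
        if s.max_fingers > 1:
            continue  # only single-touch sequences are analysed
        x_norm = s.down_mm[0] / pad_width_mm  # 0.0 = left edge, 1.0 = right edge
        if x_norm < edge or x_norm > 1.0 - edge:
            continue  # edge zones: likely palm interactions, see footnote [1]
        duration = s.t_up_ms - s.t_down_ms
        movement = math.dist(s.down_mm, s.up_mm)
        if duration <= max_ms and movement <= max_mm:
            result.append((duration, movement))
    return result


seqs = [
    TouchSequence(0, 60, (50.0, 30.0), (50.1, 30.0), 1),     # short and still
    TouchSequence(100, 400, (50.0, 30.0), (50.0, 30.0), 1),  # held too long
    TouchSequence(500, 540, (5.0, 30.0), (5.0, 30.0), 1),    # in the left 10%
    TouchSequence(600, 650, (50.0, 30.0), (50.0, 30.0), 2),  # two fingers
]
print(len(candidate_taps(seqs, pad_width_mm=100.0)))  # 1
```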

Let's have a look at the results. First, a simple picture that merely plots the start location of each tap, normalised to the width/height of the touchpad. As you can see, taps are primarily clustered around the center but can really occur anywhere on the touchpad. This means any attempt at detecting taps by location would be unreliable.

Normalized distribution of touch sequence start points (relative to touchpad width/height)

You can easily see the empty areas in the left-most and right-most 10%; they are an artefact of the filtering.

The analysis of time is more interesting: there are spikes around the 50ms mark, with quite a few outliers going towards 100ms, forming what looks like a narrow normal distribution curve. The data points are overlaid with markers for the mean [2], the 50th percentile, the 90th percentile and the 95th percentile [3]. And the data says: 95% of events fall below 116ms. That's something to go on.
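For reference, this is one way to compute the markers shown in the graphs. The post does not say which percentile definition the analysis script used; this sketch uses the nearest-rank method (the smallest value with at least p% of the data at or below it). Other tools, e.g. NumPy's `percentile`, interpolate between neighbouring values by default, so results can differ slightly on small data sets. The function names are hypothetical.

```python
import math
import statistics


def percentile(values, p):
    """Nearest-rank percentile: the smallest value such that at least
    p percent of the data is less than or equal to it (0 < p <= 100)."""
    if not values:
        raise ValueError("percentile of empty data")
    ordered = sorted(values)
    # 1-based rank; computed as p * n / 100 to keep integer inputs exact
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarise(values):
    """The markers drawn on the graphs: mean and 50/90/95th percentiles."""
    return {
        "mean": statistics.mean(values),
        "p50": percentile(values, 50),
        "p90": percentile(values, 90),
        "p95": percentile(values, 95),
    }


durations_ms = list(range(1, 101))  # toy data, not the recorded taps
print(summarise(durations_ms))  # {'mean': 50.5, 'p50': 50, 'p90': 90, 'p95': 95}
```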

Times between touch down and touch up for a possible tap event.

Note that we're using a 250ms timeout here and thus even look at touches that would not have been detected as taps by libinput. If we reduce the range to the 180ms libinput uses, we get a 95th percentile of 98ms, i.e. "of all touches currently detected as taps, 95% are 98ms or shorter".

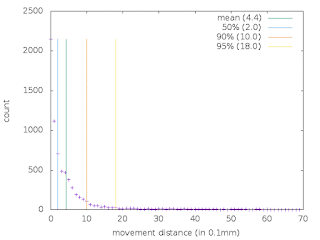

The analysis of distance is similar: most of the tap sequences have little to no movement, with 50% falling below 0.2mm of movement. Again, the data points are overlaid with markers for the mean, the 50th percentile, the 90th percentile and the 95th percentile. And the data says: 95% of events fall below 1.8mm. Again, something to go on.
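The recordings contain positions in device units, so distances have to be converted to mm before they can be compared with the thresholds. The kernel reports the resolution of the absolute x/y position axes in units per mm. A minimal sketch, assuming the resolutions have already been taken from the recording (the function name and the example resolution of 42 units/mm are hypothetical):

```python
import math


def movement_mm(down, up, xres, yres):
    """Distance in mm between the finger-down and finger-up positions.

    down, up: (x, y) positions in device units
    xres, yres: axis resolutions in units per mm (may differ per axis)
    """
    dx_mm = (up[0] - down[0]) / xres
    dy_mm = (up[1] - down[1]) / yres
    return math.hypot(dx_mm, dy_mm)


# Hypothetical resolution of 42 units/mm on both axes
print(movement_mm((1000, 2000), (1126, 2168), 42, 42))  # 5.0
```

Each axis is converted separately before combining because x and y resolutions are not necessarily equal; computing the distance in device units first would skew diagonal movement.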

Movement between the touch down and the touch up event for a possible tap (10 == 1mm)

Note that we're using a 6mm threshold here and thus even look at touches that would not have been detected as taps by libinput. If we reduce the range to the 3mm libinput uses, we get a 95th percentile of 1.2mm, i.e. "of all touches currently detected as taps, 95% move 1.2mm or less".

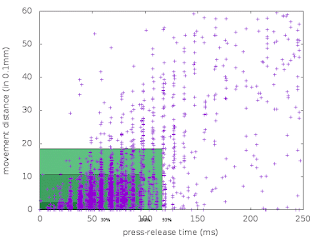

Now let's combine the two. Below is a graph mapping times and distances from touch sequences. In general, the longer the time, the more movement we get, but most of the data is in the bottom left. Since doing percentiles is tricky on 2 axes, I mapped the respective axes individually. The biggest rectangle is the 95th percentile for time and distance; the number below it shows how many data points actually fall into this rectangle. Looks promising: the vast majority of touch points still fall into the respective 95th percentiles, though the numbers are slightly lower than the individual axes suggest (a touch can be within the 95th percentile on one axis but outside it on the other).
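Counting how many touches fall inside such a rectangle is a simple filter over (time, distance) pairs. A sketch, assuming the pairs come from a filtering step like the one described above (`fraction_in_box` is a hypothetical helper):

```python
def fraction_in_box(samples, max_ms, max_mm):
    """Return the fraction of (duration_ms, movement_mm) samples that are
    within both limits, i.e. inside the max_ms x max_mm rectangle."""
    if not samples:
        return 0.0
    inside = sum(1 for duration, movement in samples
                 if duration <= max_ms and movement <= max_mm)
    return inside / len(samples)


samples = [(50, 0.1), (60, 0.3), (90, 0.2), (120, 0.5), (70, 2.0)]
print(fraction_in_box(samples, 100, 1.8))  # 0.6
```

Because the 180ms/3mm box lies entirely inside the 250ms/6mm box, the same helper also gives the share of touches in the band between the two: `fraction_in_box(s, 250, 6) - fraction_in_box(s, 180, 3)`.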

Time to distance map for all possible taps

Again, this is for the 250ms by 6mm range. About 3.3% of the events fall into the area between 180ms/3mm and 250ms/6mm. There is a chance that some of those touches were short, small movements rather than taps; we just can't know from the data.

So based on the above, we learned one thing: it would not be reliable to detect taps based on their location. But we also now suspect two things: we can reduce the timeout, and we can reduce the movement threshold, both without sacrificing a lot of reliability.

Verification of findings

Based on the above, our hypothesis is: we can reduce the timeout to 116ms and the movement threshold to 1.8mm while still having a 93% detection reliability. This is the most conservative reading, based on the extended thresholds.

To verify this, we needed to collect tap data from multiple users in a standardised and reproducible way. We wrote a basic website that displays 5 circles on a canvas (see the screenshot below) and asked a bunch of co-workers in two different offices [4] to tap them. While they did so, evemu-record was running in the background to capture the touchpad interactions. In both offices, the touchpad was the one from a Lenovo T450.

Screenshot of the <canvas> that users were asked to perform the taps on.

Some users ended up clicking instead of tapping and we had to discard those recordings. The total number of useful recordings was 15 from the Paris office and 27 from the Brisbane office. In total we had 245 taps (some users missed the circle on the first go, others double-tapped).

We asked each user three questions: "do you know what tapping/tap-to-click is?", "do you have tapping enabled?" and "do you use it?". The answers are listed below:

- Do you know what tapping is? 33 yes, 12 no

- Do you have tapping enabled? 19 yes, 26 no

- Do you use tapping? 10 yes, 35 no

I admit I kinda screwed up the data collection here because the answers include those users whose recordings we had to discard. And the questions could've been better. So I'm not going to go into too much detail. The only useful thing here is: the majority of users had tapping disabled and/or don't use it, which should make any potential learning effect disappear [5].

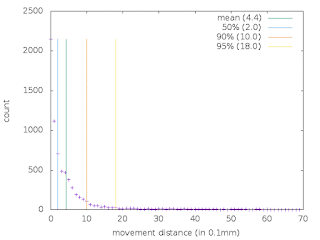

OK, let's look at the data sets, using the same scripts as above:

Times between touch down and touch up for tap events

Movement between the touch down and the touch up events of a tap (10 == 1mm)

95th percentile for time is 87ms. 95th percentile for distance is 1.09mm.

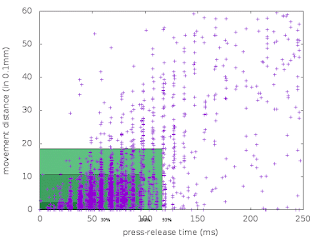

Both are well within the numbers we saw above. The combined diagram shows that 87% of events fall within the 87ms/1.09mm box.

Time to distance map for all taps

The few outliers here are close enough to the edge that expanding the box to 100ms/1.3mm gets us more than 95%. So it appears that our hypothesis is correct: since the 116ms/1.8mm box fully contains the 100ms/1.3mm box, reducing the timeout to 116ms and the movement threshold to 1.8mm will have at least a 95% detection reliability on this data. Furthermore, the clean data suggests we can use lower thresholds than previously assumed and still get a good detection ratio. Specifically, data collected in a controlled environment across 42 different users of varying familiarity with touchpad tapping shows that 100ms and 1.3mm get us a 95% detection rate of taps.

What does this mean for users?

Based on the above, the libinput thresholds will be reduced to 100ms and 1.3mm. Let's see how we go with this; we can increase them in the future if misdetection is higher than expected. Patches will be on the wayland-devel list shortly.

For users who don't have tapping enabled, this will not change anything. All users who have tapping enabled will see a more responsive cursor on small movements, as the time and distance thresholds have been significantly reduced. Some users may see a drop in tap detection rate. This is hopefully a subconscious enough effect that those users learn to tap faster or with less movement. If not, we have to look at it separately and see how we can deal with that.

If you find any issues with the analysis above, please let me know.

[1] These scripts analyse raw touchpad data, so they don't benefit from libinput's palm detection.

[2] Note: the mean is the arithmetic average and is pulled around by strong outliers; the median (the 50th percentile) is not. Look it up, it's worth knowing.

[3] The Xth percentile is the value below which X% of events fall.

[4] The Brisbane and Paris offices. No separate analysis was done, so it is unknown whether close proximity to baguettes has an effect on tap behaviour.

[5] i.e. the effect of users learning how to use a system that doesn't work well out-of-the-box. This may result in e.g. quicker taps from those who are familiar with the system than from those who aren't.