Last November, Jonas Ådahl sent an RFC to the wayland-devel list about libinput, a common library to handle input devices in Wayland compositors. Fast-forward to today and we are now at libinput 0.6, with broad support for devices and features. In this post I'll give an overview of libinput and why it is necessary in the first place. Unsurprisingly I'll be focusing on Linux; for other systems please mentally add the required asterisks, footnotes and handwaving.

The input stack in X.org

The input stack as it currently works in X.org is a bit of a mess. I'm not even talking about the different protocol versions that we need to support and that are partially incompatible with each other (core, XI 1.x, XI2, XKB, etc.), I'm talking about the backend infrastructure. Let's have a look:

The graph above is a simplification of the input stack, focusing on the various high-level functionalities. The X server uses some device discovery mechanism (udev now, previously hal) and matches each device with an input driver (evdev, synaptics, wacom, ...) based on the configuration snippets (see your local /usr/share/X11/xorg.conf.d/ directory).

The idea of having multiple drivers for different hardware was great when hardware still mattered, but these days we only speak evdev and the drivers that are hardware-specific are racing the dodos to the finishing line.

The drivers can communicate with the server through the very limited xf86 DDX API, but there is no good mechanism to communicate between the drivers. It's possible, just not doable in a sane manner. Most drivers support multiple X server releases, so any communication between drivers would need to account for every combination of versions. Only the server knows which drivers are loaded, through a couple of structs. Knowledge of things like "which device is running synaptics" is obtainable, but not sensibly. Likewise, drivers can get to the drivers of other devices, but not reasonably so (the API is non-opaque, so you can get to anything if you find the matching header).

Some features are provided by the X server: pointer acceleration, disabling a device, mapping a device to a monitor, etc. Most other features such as tapping, two-finger scrolling, etc. are provided by the driver. That leads to an interesting feature support matrix: synaptics and wacom both provide two-finger scrolling, but only synaptics has edge-scrolling. evdev and wacom both have calibration support, but with incompatible configuration options, etc. The server in general has no idea what feature is being used; all it sees is button, motion and key events.

The general result of this separation is that of a big family gathering. It looks like a big happy family at first, but then you see that synaptics won't talk to evdev because of the tapping incident a couple of years back, mouse and keyboard have no idea what forks and knives are for, wacom is the hippy GPL cousin that doesn't even live in the same state and no-one quite knows why elographics keeps getting invited. The X server tries to keep the peace by just generally getting in the way of everyone so no-one can argue for too long. You step back, shrug apologetically and say "well, that's just how these things are, right?"

To give you one example, and I really wish this were a joke: the server is responsible for button mappings, but tapping is implemented in synaptics. In order to support left-handed touchpads, gnome-settings-daemon sets the button mappings on the device in the server. Then it has to swap the tapping actions from left/right/middle for 1/2/3-finger tap to right/left/middle. That way synaptics submits right button clicks for a one-finger tap, which is then swapped back by the server to a left click.
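The double swap is easier to follow when both mappings are written out. Below is a minimal Python sketch, assuming X button numbering (1 = left, 2 = middle, 3 = right). The dictionaries and `delivered_button` are hypothetical names that only model the data flow; they are not synaptics or X server APIs.

```python
# X button numbers: 1 = left, 2 = middle, 3 = right.
LEFT, MIDDLE, RIGHT = 1, 2, 3

# Left-handed button mapping applied by the X server: swap left and right.
SERVER_BUTTON_MAP = {LEFT: RIGHT, MIDDLE: MIDDLE, RIGHT: LEFT}

# Default synaptics tap actions: 1/2/3-finger tap -> left/right/middle.
DEFAULT_TAP_ACTIONS = {1: LEFT, 2: RIGHT, 3: MIDDLE}

# What gnome-settings-daemon has to configure in synaptics so that the
# server's swap cancels out: 1/2/3-finger tap -> right/left/middle.
LEFT_HANDED_TAP_ACTIONS = {1: RIGHT, 2: LEFT, 3: MIDDLE}


def delivered_button(fingers, tap_actions):
    """Button a client finally sees for an n-finger tap (hypothetical model)."""
    driver_button = tap_actions[fingers]     # emitted by synaptics
    return SERVER_BUTTON_MAP[driver_button]  # remapped by the X server


for fingers in (1, 2, 3):
    print(fingers, "finger(s):",
          "default ->", delivered_button(fingers, DEFAULT_TAP_ACTIONS),
          "pre-swapped ->", delivered_button(fingers, LEFT_HANDED_TAP_ACTIONS))
```

With the default tap actions, a one-finger tap would arrive as a right click once the server swaps the buttons; only by pre-swapping the tap actions in the driver does it end up as a left click again.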

The X.org input drivers are almost impossible to test. synaptics has (quick guesstimate done with grep and wc) around 70 user-configurable options. Testing all combinations would mean something on the order of 10¹⁰¹ combinations, not accounting for HW differences. Testing the driver on its own is not doable; you need to fire up an X server and then run various tests against that (see XIT). But now you're not testing the driver, you're testing the whole stack. And you can only get to the driver through the X protocol, and that is two APIs away from the driver core. Plus, test results get hard to evaluate as different modules update separately.
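To put rough numbers on that, here is a small Python calculation. It assumes the same guesstimate of 70 options; the testing rate of 1000 configurations per second is a hypothetical figure chosen for illustration. If every option were a plain on/off switch, the count would be 2⁷⁰; one way to read the 10¹⁰¹ figure is options that take about 28 values each on average.

```python
NUM_OPTIONS = 70  # rough count of user-configurable synaptics options

# Lower bound: if every option were a plain on/off switch, each option
# doubles the number of distinct configurations.
boolean_configs = 2 ** NUM_OPTIONS

# Average number of values per option that would yield 10**101 configurations.
avg_values_per_option = 10 ** (101 / NUM_OPTIONS)

# Time to exhaustively test only the boolean case at a hypothetical rate.
TESTS_PER_SECOND = 1000
SECONDS_PER_YEAR = 365.25 * 24 * 3600
years = boolean_configs / TESTS_PER_SECOND / SECONDS_PER_YEAR

print(f"boolean-only configurations: {boolean_configs:.2e}")
print(f"values per option for 10**101: {avg_values_per_option:.1f}")
print(f"years to test boolean case: {years:.1e}")
```

Even the boolean-only lower bound, about 1.18 × 10²¹ configurations, would take on the order of 10¹⁰ years at that rate, before accounting for any hardware differences.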

So in summary, in the current stack features are distributed across modules that don't communicate with each other. The stack is impossible to test, partially thanks to the vast array of user-exposed options. These are largely technical issues: we control the xf86 DDX API and can break it when needed, but at this point you're looking at something that resembles a rewrite anyway. And of course, don't you dare change my workflow!

The input stack in Wayland

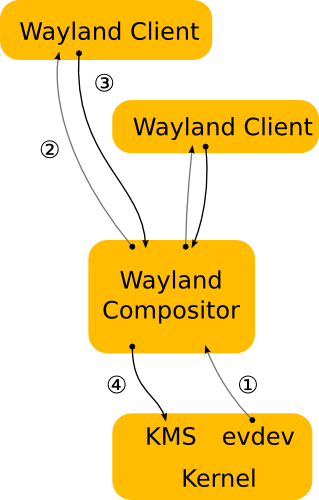

From the input stack's POV, Wayland simply merges the X server and the input modules into one item. See the architecture diagram from the wayland docs:

evdev gets fed into the compositor and wayland comes out the other end. If life were so simple... Because the X.org input modules are inseparable from the X server, Weston and other compositors each started implementing their own input stack. Let me introduce a game called feature bingo: guess which feature is currently not working in $COMPOSITOR. If you collect all five in a row, you get to shout "FFS!" and win the prize of staying up all night fixing your touchpad. As much fun as that can be, maybe let's not do that.

libinput

libinput provides a full input stack to compositors. It handles device discovery via udev as well as event processing, and simply provides the compositor with the pre-processed events. If one of the devices is a touchpad, libinput will handle tapping, two-finger scrolling, etc. All the compositor needs to worry about is moving the visible cursor, selecting the client and converting the events into wayland protocol. The stack thus looks something like this:

Almost everything has moved into libinput, including device discovery and pointer acceleration. libinput has internal backends for pointers, touchpads, tablets, etc. but they are not exposed to the compositor. More importantly, libinput knows about all devices (within a seat), so cross-device communication is possible but invisible to the compositor. The compositor still does configuration parsing, but only for user-specific options such as whether to enable tapping or not. And it doesn't handle the actual feature, it simply tells libinput to enable or disable it.

The graph above also shows another important thing: libinput provides an API to the compositor. libinput is not "wayland-y", it doesn't care about the Wayland protocol, it's simply an input stack. Which means it can be used as the base for an X.org input driver or even Canonical's Mir.
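To make the split between configuration and implementation concrete, here is a minimal Python sketch. `TouchpadBackend`, `set_tap_enabled`, `handle_tap` and `compositor_apply_config` are hypothetical names standing in for libinput internals and compositor code; they are not libinput's API (in the C API of current libinput releases, the compositor-facing call is `libinput_device_config_tap_set_enabled()`). The 1/2/3-finger to left/right/middle tap mapping is assumed for illustration.

```python
from dataclasses import dataclass


@dataclass
class ButtonEvent:
    button: str
    pressed: bool


class TouchpadBackend:
    """Hypothetical stand-in for libinput's internal touchpad handling."""

    TAP_BUTTONS = {1: "left", 2: "right", 3: "middle"}  # assumed mapping

    def __init__(self):
        self.tap_enabled = False  # configuration state, owned by the stack

    def set_tap_enabled(self, enabled: bool) -> None:
        self.tap_enabled = enabled

    def handle_tap(self, fingers: int) -> list:
        """Turn a detected n-finger tap into a press/release pair, if enabled."""
        if not self.tap_enabled:
            return []
        button = self.TAP_BUTTONS[fingers]
        return [ButtonEvent(button, True), ButtonEvent(button, False)]


def compositor_apply_config(backend: TouchpadBackend, user_config: dict) -> None:
    # The compositor only parses the user's preference and forwards it;
    # the tapping logic itself stays inside the input stack.
    backend.set_tap_enabled(bool(user_config.get("tapping", False)))


backend = TouchpadBackend()
compositor_apply_config(backend, {"tapping": True})
print(backend.handle_tap(1))
```

The compositor never sees touches being turned into a click; it flips a switch and receives ordinary button events.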

libinput is very much a black box, at least compared to X input drivers (remember those 70 options in synaptics?). The X mantra of "mechanism, not policy" allows for interesting use-cases, but it makes the default 90% use-case extremely painful from a maintainer's and integrator's point of view. libinput, much like wayland itself, is a lot more restrictive in what it allows, specifically in the requirements it places on the compositor. At the same time, it aims for a better out-of-the-box experience.

To give you an example, the X.org synaptics driver lets you arrange the software buttons more-or-less freely on the touchpad. The default placement is simply a config snippet. In libinput, the software buttons are always at the bottom of the touchpad and also at the top of the touchpad on some models (Lenovo *40 series, mainly). The buttons are of a fixed size (which we decided on after analysing usage data), and you only get a left and right button. The top software buttons have left/middle/right matching the markings on the touchpad. The whole configuration is decided based on the hardware. The compositor/user don't have to enable them, they are simply there when needed.
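A minimal Python sketch of how a touch position could be mapped to these software buttons. The row height, the equal split of the top row into thirds and the function name `software_button` are hypothetical placeholders, since the actual sizes aren't given here; `has_top_buttons` stands in for the hardware-based decision libinput makes.

```python
from enum import Enum
from typing import Optional


class Button(Enum):
    LEFT = 1
    MIDDLE = 2
    RIGHT = 3


# Hypothetical fixed height (mm) of each software button row.
BUTTON_ROW_HEIGHT_MM = 10.0


def software_button(x_mm: float, y_mm: float, width_mm: float,
                    height_mm: float, has_top_buttons: bool) -> Optional[Button]:
    """Map a touch position to a software button, or None for the normal
    pointer-motion area. (0, 0) is the touchpad's top-left corner."""
    if y_mm >= height_mm - BUTTON_ROW_HEIGHT_MM:
        # Bottom row: only a left and a right button.
        return Button.LEFT if x_mm < width_mm / 2 else Button.RIGHT
    if has_top_buttons and y_mm < BUTTON_ROW_HEIGHT_MM:
        # Top row (e.g. Lenovo *40 series): left/middle/right.
        # Equal thirds is an assumption for illustration.
        third = width_mm / 3
        if x_mm < third:
            return Button.LEFT
        if x_mm < 2 * third:
            return Button.MIDDLE
        return Button.RIGHT
    return None


# A hypothetical 100 x 60 mm touchpad with top buttons.
print(software_button(50, 5, 100, 60, has_top_buttons=True))
```

Because the layout depends only on the hardware, there is nothing here for the compositor or the user to configure.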

That may sound restrictive, but we have a number of features on top that we can enable where required. Pressing both left and right software buttons simultaneously gives you a middle button click; the middle button in the top software button row provides wheel emulation for the trackstick. When the touchpad is disabled, the top buttons continue to work and they even grow larger to make them easier to hit. The touchpad can be set to auto-disable whenever an external mouse is plugged in.
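The middle-button emulation on the software buttons can be sketched as a decision taken at the moment the clickpad is physically pressed. This is a minimal Python sketch assuming the input stack already knows which software-button areas have a finger in them; `resolve_click` and the `Button` enum are hypothetical, not libinput code, and the trackstick wheel emulation and auto-disable features are left out.

```python
from enum import Enum


class Button(Enum):
    LEFT = 1
    MIDDLE = 2
    RIGHT = 3


def resolve_click(areas_touched: set) -> Button:
    """Decide which button a physical clickpad click produces, given the
    software-button areas that have a finger in them at click time."""
    if Button.LEFT in areas_touched and Button.RIGHT in areas_touched:
        # Fingers on both left and right buttons: emulate a middle click.
        return Button.MIDDLE
    if Button.RIGHT in areas_touched:
        return Button.RIGHT
    if Button.MIDDLE in areas_touched:
        # Only reachable via the top button row, where one exists.
        return Button.MIDDLE
    # Finger only in the left area, or outside all button areas.
    return Button.LEFT


print(resolve_click({Button.LEFT, Button.RIGHT}))
```
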

Having libinput as a generic stack also means we can have tests. So we know what the interactions are between software buttons and tapping; we don't have to wait for a user to trip over a bug to tell us what's broken.

Summary

We need a new input stack for Wayland, simply because the design of compositors in a Wayland world is different. We can't use the current modules of X.org for a number of technical reasons, and the amount of work it would require to get those up to scratch and usable is equivalent to a rewrite.

libinput now provides that input stack. It's the default in Weston at the time of this writing and is used in other compositors or in the process of being adopted. It abstracts most of input away and, more importantly, makes input consistent across all compositors.

17 comments:

Dude, you just broke my workflow.

A true middle-click is a _must_ for modern web-browsers. And no, both-buttons-click is as braindead as it was on a mouse.

So at least provide some way to set up the middle-button placement. Even Mac OS X allows that!

What I am missing is:

* Scrolling for a TrackPoint

* Scrolling on the side of a TouchPad

Two-finger scrolling is really awkward.

Are there any near-future plans to support CEC over HDMI as an input source?

Does it support >8bit keycodes for both Wayland and X.Org?

Microsoft Ergonomic Desktop 4000 has a few keys with >8bit keycodes which work fine on text console (evtest) and fail to be recognized in X.Org (xev).

Everybody is linking the "X in SteamOS" article, and I can't read it yet... being a poor student is not cool ;)

Great article, but I am curious if game controllers also fit in libinput.

Marcin: It supports extended keycodes yes, but only for Wayland. X.Org servers will just drop high keycodes, because it was deemed to be far too difficult to get high keycodes whilst retaining backwards compatibility with classic input (xmodmap et al) as well as XKB. Part of the problem here is that those 8-bit keycodes are part of Xlib API/ABI, so we can never break them, but still have to expose the entire map. As XKB is so complex, the combinatorial explosion of corner cases kills us. We sat down a while ago and worked out exactly how to fix it, and it fell firmly in the 'too hard' basket.

Taking away my middle button is definitely a case of http://xkcd.com/1172/...

Fred Morcos: that's kernel territory, by the time libinput gets to see it everything is evdev.

Marcin Juszkiewicz: as daniels said, Wayland only. the keycode limitation is imposed by the X protocol, not by the input stack itself (e.g. XI2 has 32 bit keycodes).

Peter: Are you sure this is the case? What is the need for libcec then?

What about ibus and input/compositing engines in general? How do they fit this picture?

Will we have again a situation in which some applications receive processed symbols from libinput and others receive their input via an input engine that, in turn, receives the raw keycode/scancodes from libinput/evdev?

Fred Morcos:

I use libcec-daemon for CEC input.

https://github.com/bramp/libcec-daemon

I use it with X.Org, but it should work with libinput too.

All very sensible, but allowing a separate touchpad mapping for the middle click is really vital - it's a very commonly used action in very commonly used software (web browsers for opening a new tab, and all sorts of text input boxes in toolkits that maintain different paste buffers for middle-click vs. ctrl-C). Emulating middle-click as left+right is only 90-95% reliable in my experience, and for something as vital as middle-click, that's not good enough.

gioele: libinput is two APIs away from any applications. any processing of key events à la ibus would be in the compositor/wayland protocol and that's above where libinput sits in the stack.

Hi Peter,

Very nice writeup.

Something that's a bit unclear to me was when you said "these days we only speak evdev and the drivers that are hardware-specific are racing the dodos to the finishing line". That seems to be saying that we'll only have a single driver for all hardware, but I don't see how that can be the case. Did you mean that all drivers would now need to use the evdev protocol? If that's so, does that mean that drivers like wacom or synaptics would need to be rewritten, or do they already speak evdev?

Best/Liam

liam: wacom and synaptics already use evdev as the hardware protocol (and have done so for years). with very few exceptions we expect the kernel to have the hw drivers, present an evdev interface and we hook onto that in X/libinput.

exceptions are serial devices, but even for those we're using kernel drivers (through inputattach if need be) so really, there is no hw-specific driver anymore.

@Peter

Ah, I wasn't aware the drivers already spoke evdev.

Thanks very much for the clarification.

A nitpick: all combinations of 70 options is not 10¹⁰¹, but much less. It is the powerset of 70, and is equal to 2⁷⁰
